Environment

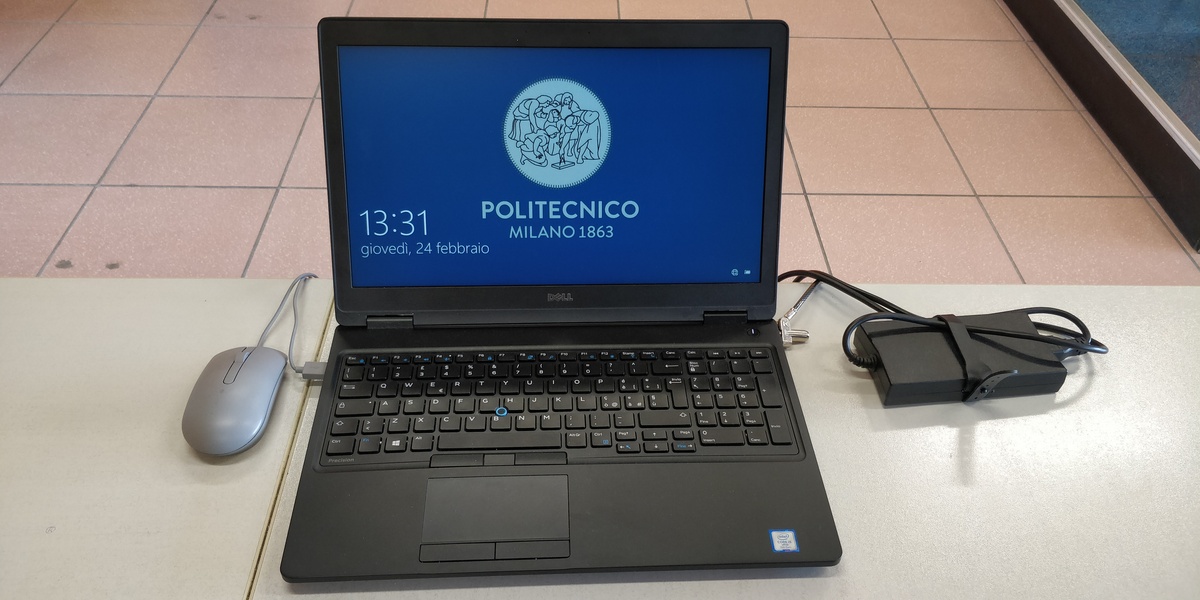

Team hardware

SWERC 2021-2022 will be held onsite. The computers for the contest are provided to the teams and will be allocated randomly.

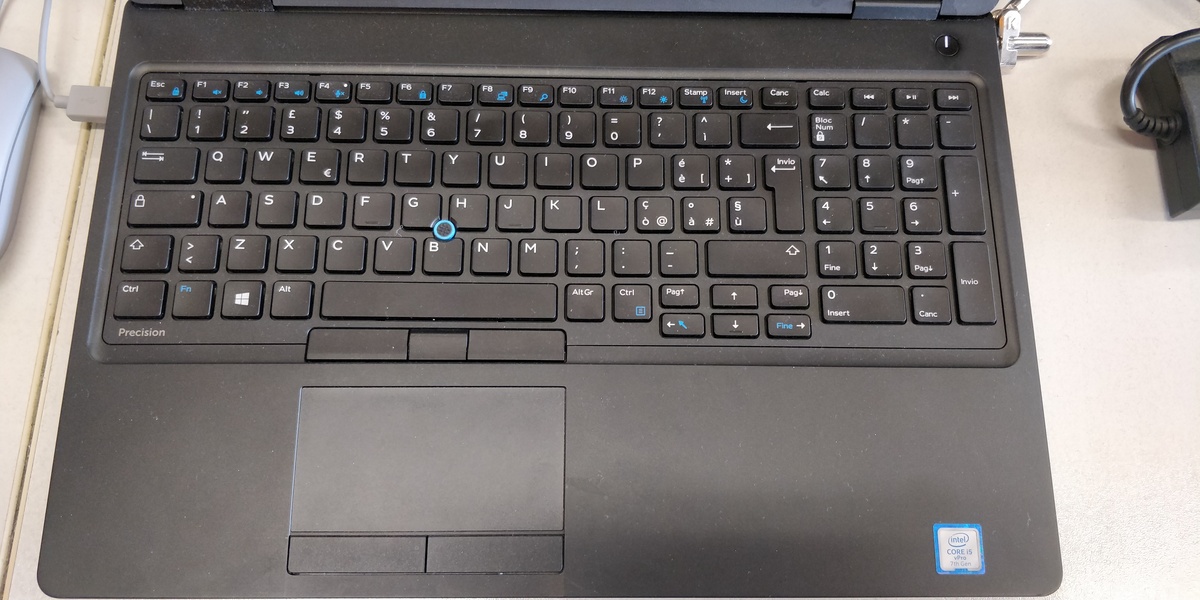

All the machines will be laptops with QWERTY keyboards with the Italian layout. Teams are not permitted to bring their own keyboards, but may put stickers on the keyboard if they wish; see regulations.

Of course, no matter the physical layout of the keyboards, it is always possible to reconfigure them in software to a different layout (that does not match what is printed on the keyboard).

All the laptops are Dell Precision 3520 (15.6") with the following hardware:

- CPU: Intel i5-7440HQ

- RAM: 8 GB

- Storage: 512 GB SSD NVMe

- GPU: Intel HD Graphics 630 + Nvidia Quadro M620 2 GB

Note that the Nvidia GPU will be disabled.

Team software

The software configuration of the team environment is described here.

- OS
  - Ubuntu 20.04 LTS Linux (64-bit)
- Desktop
  - GNOME 3
- Editors
  - vi/vim
  - gvim
  - emacs
  - gedit
  - geany
  - kate
  - Atom
- Languages
  - Java
    - OpenJDK version 11
  - C
    - gcc
  - C++
    - g++
  - Python 3
    - PyPy
  - Kotlin
  - Note that Python 2 is not supported.
- IDEs
  - IntelliJ IDEA Community Edition
  - CLion
  - PyCharm Community
  - Eclipse IDE for Java Developers
  - Eclipse IDE for Python Developers
  - Visual Studio Code
    - C/C++ extension by Microsoft
    - Language Support for Java extension by Red Hat
    - Python extension by Microsoft
    - Vim by vscodevim (disabled by default)
  - Apache NetBeans IDE
  - Code::Blocks
- Debuggers
  - gdb
  - valgrind
  - ddd
- Browsers
  - Firefox
  - Chromium

The exact versions of the packages above will be published close to the contest; they will be the latest versions in the official Ubuntu 20.04 repositories, where applicable.

Teams may ask for more software to be installed by emailing us (at swerc.polimi@pm.me) no later than March 23rd. We will consider all incoming requests, but we reserve the right to deny any request we do not consider reasonable.

Update 09/04/2022: The first version of a Virtual Machine that looks similar to the contest environment has been published at https://vm.swerc.eu. This VM can be imported into Oracle VirtualBox and can be used to test whether your favorite programs from the list above work as intended. Most of the programs will have no customization, apart from some installed plugins.

These are the known issues with the first version of the VM:

- The default resolution is 800x600 (can be changed by right-clicking on the desktop).

- Eclipse is not installed.

- Visual Studio Code suggests installing additional extensions with some languages (can be safely ignored).

- Visual Studio Code doesn't have Kotlin support.

- In order to use the Vim plugin for Visual Studio Code, you should start the [VIM] Visual Studio Code application from the launcher.

- When compiling, NetBeans tries to download Maven packages from the Internet, which won't work offline.

- Code::Blocks has the wrong "terminal" configured, so it fails to launch the compiled programs (can be fixed by setting gnome-terminal in the preferences).

- Code::Blocks debugger doesn't work.

If you find other issues you can contact us at swerc.polimi@pm.me. Consider that fixing problems during or after the practice session is a lot harder. Therefore, if you notice any issue don't hesitate to contact us immediately.

Update 20/04/2022: A second version of the Virtual Machine has been published at https://vm.swerc.eu.

- The terminal is fixed for Code::Blocks; GDB in Code::Blocks is now a wontfix

- The documentation+DOMjudge URL is set for the default browsers

- The default my* aliases have been converted to shell scripts so they can be chosen as compilers in editors

- The machine will log in automatically at the start of the contest

Compilation flags

The judging system will compile submissions with all the following options.

In some cases, it may need to add additional flags to specify the path of the produced binary (e.g. -o ... for C/C++).

Each exists as an alias on the team machines:

| Language | Implementation | Command | Alias |
|---|---|---|---|
| C | gcc | gcc -x c -Wall -Wextra -O2 -std=gnu11 -static -pipe "$@" -lm | mygcc |
| C++ | g++ | g++ -x c++ -Wall -Wextra -O2 -std=gnu++17 -static -pipe "$@" | myg++ |
| Java | OpenJDK 11 | javac -encoding UTF-8 -sourcepath . -d . "$@" | myjavac |
| | | java -Dfile.encoding=UTF-8 -XX:+UseSerialGC -Xss128m -Xms1856m -Xmx1856m "$@" | myjava |
| | | Note: 1856m is the task's memory limit (2GB) minus 192MB. | |
| Kotlin | Kotlin 1.6.0 | kotlinc -d . "$@" | mykotlinc |
| | | kotlin -Dfile.encoding=UTF-8 -J-XX:+UseSerialGC -J-Xss128m -J-Xms1856m -J-Xmx1856m "$@" | mykotlin |
| | | Note: 1856m is the task's memory limit (2GB) minus 192MB. | |
| Python | pypy3 | pypy3 "$@" | mypython3 |
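Teams that drive the compiler from their own script rather than through the aliases may find it convenient to keep the C and C++ flag sets from the table above in one place. The following is only a minimal sketch: the names `COMPILE_FLAGS` and `compile_command` are hypothetical and not part of the contest environment, and placing `-o` before the source files is an assumption about how an output path could be added.

```python
# Flags copied from the table above. The dictionary and function names
# are illustrative only; they do not exist on the team machines.
COMPILE_FLAGS = {
    "c": ["gcc", "-x", "c", "-Wall", "-Wextra", "-O2",
          "-std=gnu11", "-static", "-pipe"],
    "cpp": ["g++", "-x", "c++", "-Wall", "-Wextra", "-O2",
            "-std=gnu++17", "-static", "-pipe"],
}


def compile_command(lang, sources, output=None):
    """Build the argument list for compiling `sources` in language `lang`."""
    cmd = list(COMPILE_FLAGS[lang])
    if output is not None:
        # Assumption: the output path is passed with -o, as hinted in the text.
        cmd += ["-o", output]
    cmd += list(sources)
    if lang == "c":
        # The table places -lm after the source files for C.
        cmd.append("-lm")
    return cmd


print(" ".join(compile_command("cpp", ["a.cpp"], output="a")))
```

The returned list can be passed directly to `subprocess.run` if you want to invoke the compiler from Python.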

Judging hardware

Compilation and execution as described above will take place in a “sandbox” on dedicated judging machines. The judging machines will be as identical as possible to, and at least as powerful as, the machines used by teams. The sandbox will allocate 2GB of memory; the entire program, including its runtime environment, must execute within this memory limit. For interpreted languages (Java, Python, and Kotlin) the runtime environment includes the interpreter (that is, the JVM for Java/Kotlin and the Python interpreter for Python).

The sandbox memory allocation size will be the same for all languages and all contest problems. For Java and Kotlin, the above commands show the stack size and heap size settings which will be used when the program is run in the sandbox.
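The 1856m heap value in the Java and Kotlin commands follows from simple arithmetic on the sandbox limit. The sketch below reproduces it, assuming that "2GB" is read as 2048 MiB; the constant and function names are illustrative only.

```python
# Assumption: the 2 GB sandbox limit is interpreted as 2048 MiB.
SANDBOX_LIMIT_MIB = 2048
# Amount subtracted from the limit, as stated in the note on the commands.
RESERVE_MIB = 192


def jvm_heap_mib(limit_mib=SANDBOX_LIMIT_MIB, reserve_mib=RESERVE_MIB):
    """Heap size (in MiB) left once the reserve is subtracted from the limit."""
    return limit_mib - reserve_mib


heap = jvm_heap_mib()
print(f"-Xms{heap}m -Xmx{heap}m")  # prints "-Xms1856m -Xmx1856m"
```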

Some hardware resources, including a few of the servers, have been kindly provided by Politecnico di Milano's DataCloud.

Judging software

The software configuration for judge machines is based on an Ubuntu 20.04 64-bit virtual machine with exactly the same software versions as the team software above.

The contest control system that will be used is DOMjudge.

Submissions will be evaluated automatically unless something unexpected happens (system crash, error in a test case, etc.).

Verdicts are given in the following order:

- Too-late: This verdict is given if the submission was made after the end of the contest. This verdict does not lead to a time penalty.

- Compiler-error: This verdict is given if the contest control system failed to compile the submission. Warnings are not treated as errors. This verdict does not lead to a time penalty. Details of compilation errors will not be shown by the judging system. If your code compiles correctly in the client environment but leads to a Compiler-error verdict on the judge, you should submit a clarification request to the judges.

- The submission is then evaluated on several secret test cases in some fixed order. Each test case is independent, i.e., the time limits, memory limits, etc., apply to each individual test case. If the submission fails to process a test case correctly, then evaluation stops, an error verdict is returned (see next list), and a penalty of 20 minutes is added for the problem (penalties are only counted against the team if the problem is eventually solved). If a submission is rejected, no information will be provided about the number of the test case(s) where the submission failed.

- Correct: If the evaluation process completes and the submission has returned the correct answer on each secret test case following all requirements, then the submission is accepted. Note that this verdict may still be overridden manually by judges.
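The penalty rule above can be illustrated with a short sketch. It only counts the 20-minute penalties for one problem and does not model any other component of the score. The verdict strings and the function name are illustrative, and it assumes that submissions made after the first accepted one are ignored, which the text above does not state.

```python
PENALTY_MINUTES = 20
# Verdicts that, according to the list above, do not lead to a time penalty.
NO_PENALTY = {"too-late", "compiler-error"}


def penalty_minutes(verdicts):
    """Penalty for one problem, given its verdicts in submission order."""
    rejected = 0
    for verdict in verdicts:
        if verdict == "correct":
            # Penalties only count once the problem is solved.
            return rejected * PENALTY_MINUTES
        if verdict not in NO_PENALTY:
            rejected += 1
    # The problem was never solved, so nothing is counted against the team.
    return 0


print(penalty_minutes(["wrong-answer", "compiler-error", "timelimit", "correct"]))  # 40
print(penalty_minutes(["run-error", "wrong-answer"]))  # 0
```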

The following errors can be raised on a submission. The verdict returned is the one for the first test case where the submission has failed. The verdicts are as follows, in order of priority:

- Error verdicts where execution did not complete: the verdict returned will be the one of the first error amongst:

- Output-limit: The submission produced too much output.

- Run-error: The submission failed to execute properly on a test case (segmentation fault, divide by zero, exceeding the memory limit, etc.). Details of the error are not shown.

- Timelimit: The submission exceeded the time limit on one test case, which may indicate that your code went into an infinite loop or that the approach is not efficient enough.

- Error verdicts where execution completed but did not produce the correct answer: the verdict returned will be the first matching verdict amongst:

- No-output: There is at least one test case where the submission executed correctly but did not produce any result; and on other test cases, it executed properly and produced the correct output.

- Wrong-answer: The submission executed properly on a test case, but it did not produce the correct answer. Details are not specified.

Note that there is no "presentation-error" verdict: errors in the output format are treated as wrong answers.
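The way these priorities combine can be sketched as follows. This is not DOMjudge's implementation: representing each test case as a set of detected error conditions is an assumption made purely for illustration, as is the function name.

```python
# Error verdicts in the order of priority listed above.
PRIORITY = ["output-limit", "run-error", "timelimit", "no-output", "wrong-answer"]


def final_verdict(per_test_case):
    """Return the verdict for a submission.

    `per_test_case` is a list with one set of error conditions per test
    case, in judging order (a hypothetical representation). The first
    failing test case decides, and within it the highest-priority error wins.
    """
    for conditions in per_test_case:
        for verdict in PRIORITY:
            if verdict in conditions:
                return verdict
    return "correct"


# The second test case fails first; timelimit outranks wrong-answer.
print(final_verdict([set(), {"wrong-answer", "timelimit"}, {"run-error"}]))  # timelimit
```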

Problem set

The problem set will be provided on paper (one copy per contestant), and also in PDF files on the judge system (allowing you to copy and paste the sample inputs and outputs). We may also provide an archive of the sample inputs and outputs to be used directly.

Making a submission

Once you have written code to solve a problem, you can submit it to the contest control system for evaluation.

Each team will be automatically logged into the contest control system.

You can submit using the web interface, by opening a web browser and using the provided links/bookmarks, or you can submit by command line using the submit program.

(If you use the submit program, make sure that the file that you wish to submit has the correct name, e.g., a.cpp, because submit uses this to determine automatically for which problem you are submitting.)
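To check a file name before submitting, something like the following sketch may help. It is not the logic of the real submit program, whose exact rules are not described here: the extension table and the function name are hypothetical, and only the idea that the base name identifies the problem comes from the text above.

```python
from pathlib import Path

# Hypothetical mapping from file extension to contest language.
EXTENSIONS = {
    ".c": "C",
    ".cpp": "C++",
    ".java": "Java",
    ".kt": "Kotlin",
    ".py": "Python 3",
}


def guess_submission(filename):
    """Split a file name into (problem, language); language is None if unknown."""
    path = Path(filename)
    return path.stem, EXTENSIONS.get(path.suffix.lower())


print(guess_submission("a.cpp"))  # ('a', 'C++')
```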

Asking questions

If a contestant has an issue with the problem set (e.g., it is ambiguous or incorrect), they can ask the judges a question using the clarification request mechanism of DOMjudge. Usually, the judges will either decline to answer or issue a general clarification to all teams to clarify the meaning of the problem set or fix the error.

If a contestant has a technical issue with the team workstation (hardware malfunction, computer crash, etc.), they should ask a volunteer in their room for help.

Neither the judges nor the volunteers will answer any requests for technical support, e.g., debugging your code, understanding a compiler error, etc.

Printing

During the contest, teams will have the possibility to request printouts, e.g., of their code. These printouts will be delivered by volunteers. Printouts can be requested within reason, i.e., as long as the requested quantities do not negatively impact contest operations.

You can print using the web interface or by command line using the printout program.

(Make sure that the file that you wish to print has the correct extension, because printout uses this to determine automatically which syntax highlighting to apply.)

Location and rooms

The exact location and rooms for the contest will be published soon.